By Rob Dwyer

Date Published: March 08, 2023 (Last Updated July 12, 2023)


Late last month, my wife had a business event in Las Vegas, so I tagged along for a few days. Our stay was at a well-known hotel brand that focuses on business travelers rather than tourists. As we waited for the elevator, we saw a "Fun Facts" display on a table directly across from the elevators. If any town has some fun facts, it has to be Las Vegas, right?

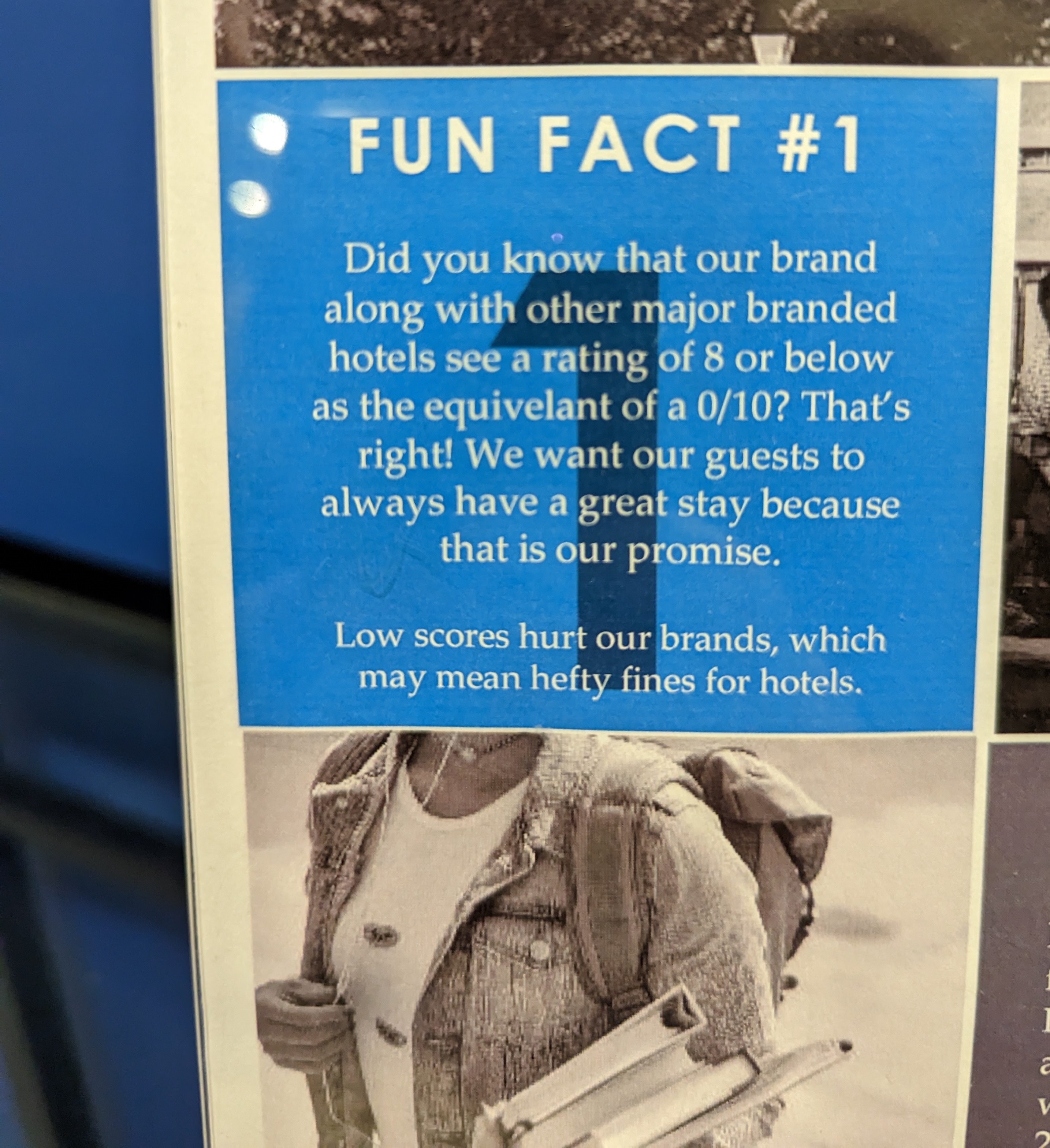

The very first "Fun Fact" was this:

Yikes! Many of you will pick up on the less-than-subtle nudge here to give a 9 or 10 on the NPS survey that is sure to arrive by email after the stay. What can we take away from this?

Many companies either have or are implementing VoC (Voice of the Customer) programs, often in the form of surveys. Whether it's NPS (Net Promoter Score), CSAT (Customer Satisfaction), CES (Customer Effort Score), or some other survey type, a contact center interaction is often the trigger for that survey. Of the companies that use a contact center interaction as the survey trigger, the majority assign the survey results to the agent who interacted with the customer; it's often a scorecard metric. In some cases, it's a metric that can affect the agent's compensation.

This is problematic for three reasons:

Reason #1

Regardless of how the survey is worded, customers are focused on the problem that prompted the interaction in the first place. Even the best agents may get penalized if your processes or products are faulty and customers feel the need to contact you about it. Agents aren't packing items in a warehouse or causing shipping delays. They aren't manufacturing faulty products or making coding errors. Any of these factors, regardless of agent performance, can drag down survey results.

Reason #2

Financial incentives often cause unintended behaviors, and those behaviors may be the opposite of what you really want. While survey results can paint a picture of the overall job agents are doing, if the results affect agent pay, agents will often try to game the system. Maybe it's by using less-than-subtle nudges like this hotel's. Maybe it's by directly asking for positive feedback. Maybe agents transfer upset customers to someone else just so they don't get the survey! None of that serves the purpose of customer feedback.

Reason #3

The point of a metric like NPS is to gauge customer loyalty, not agent performance. CSAT and CES are more directly linked to agent performance, but brand loyalty has an incredibly loose connection to any one experience. For instance, I have zero loyalty to this hotel brand. It's not the hotel's fault; we chose it because of its location. Am I going to recommend this hotel or this brand to anyone? Nope. Not at all. And that is no reflection on my experience during the stay. The hotel was fine. It served our needs and met my expectations in nearly every way. But meeting my expectations alone doesn't make me a loyal customer. In fact, I've already booked my next business trip to Las Vegas, and I'm staying somewhere else because another hotel will better serve my needs this time around.

"But Rob," you're thinking, "are you saying we shouldn't listen to our customers?" Of course not! In fact, you should solicit regular feedback from your customers and, more importantly, act on it. You can do this in a variety of ways, including surveys. You can also use sentiment analysis to understand when (and why) your customers are unhappy with you.

Sentiment analysis can be even more effective because surveys typically have an incredibly low response rate. If you rely only on surveys, you're hearing only from those who choose to respond. But whatever you do, don't use those measures to incentivize agents.