Picture a world where generative AI, conversational AI and large language models (LLMs) reinvent customer service and the agent's role in contact centers. Now picture the opposite, where over-promised benefits and service errors squeeze these technologies into a reduced, complementary role. Multiple companies are selling the benefits with gusto, and market adoption is underway. A market snapshot helps compare the benefits to the risks and identify possible outcomes, even as the market quickly evolves.

5 Real Benefits

1. Better, stronger, faster. Like the Six Million Dollar Man, contact centers get multiple upgrades at once. Average handle time (AHT) drops because intent is predicted better. Targeting and first-contact resolution (FCR) improve thanks to a deeper understanding of context, which also means a transfer to the right agent when one is needed. Agents no longer need to write post-session chat or call summaries, because those are generated automatically. Agents also spend less time searching databases for answers to specific questions, because their UI shows real-time answers during the session.

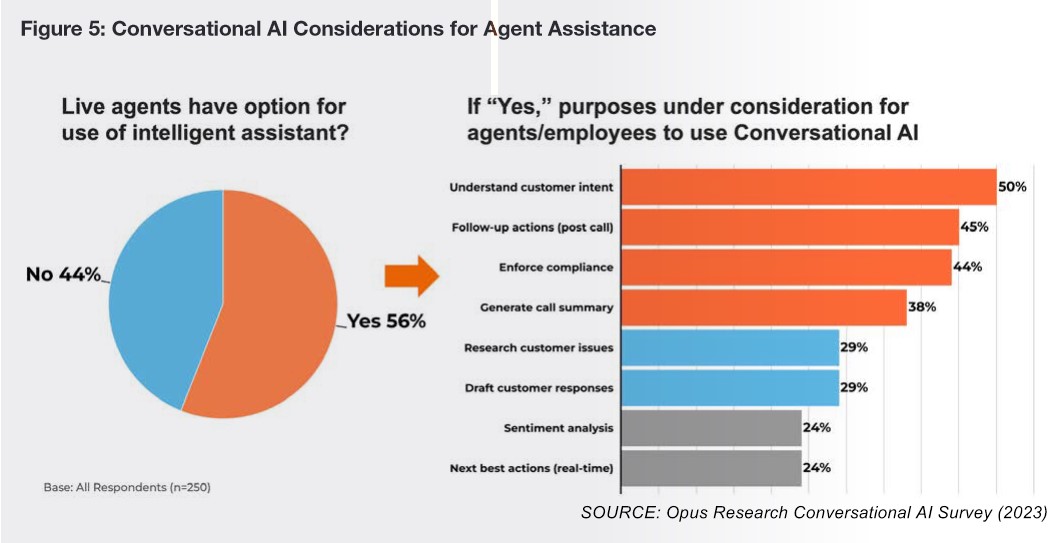

Chatbots can now become true virtual assistants capable of a real customer service dialogue. In the past, many offered a few pre-scripted answers and then offered to transfer you to an agent. Now there can be a more genuine conversation, which should boost CSAT. This Opus Research graphic shows the strikingly broad scope of pre-session and post-session benefits.

2. Just say 'Better RPA'. With generative AI, back-end processes will be automated and accelerated even further, streamlining back-office work. For example, automatically generated pre-session summaries mean the agent can simply get a consolidated voice or text summary of multiple past customer sessions instead of reading all the previous session notes. Done. Data entry and order processing. Done.

Ticket tagging and triaging. Done. These tasks were already automated to some degree, but gen AI will expand and accelerate that automation for back-office tasks. Because these applications sit behind the scenes rather than in front of customers, they carry lower CX risk, offer more control and are easier to measure against KPIs.

3. LLMs generate more self-service options. Previously, task- or issue-specific answers had to be pre-scripted. Then, traditional artificial intelligence and machine learning used rules to match questions to the answers served up to the customer. Virtual assistants built on conversational AI and LLMs can better understand the true context, serving up more accurate answers and continuing the dialogue if necessary.

LLMs boost automation and elevate FCR, reducing customer service costs. Studies say a 20-30% increase in automated answers is feasible. Because general-purpose LLMs are trained on very large, broad datasets rather than on any one company's information, some companies going 'all-in' are adopting RAG (Retrieval Augmented Generation), which retrieves relevant company documents at question time and supplies them to the LLM as context to improve response accuracy.
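To make the retrieval step concrete, here is a minimal Python sketch of the RAG pattern. It assumes a tiny, hypothetical knowledge base and uses simple word-overlap scoring in place of the vector embeddings that production systems typically use. It only builds the augmented prompt; the actual LLM call is omitted because it depends on whichever vendor API a company uses.

```python
import re
from collections import Counter

# Hypothetical knowledge-base snippets standing in for "company documents".
DOCS = {
    "returns": "Items can be returned within 30 days with the original receipt.",
    "shipping": "Standard shipping takes 3 to 5 business days; express takes 1 day.",
    "warranty": "Electronics carry a one-year limited warranty against defects.",
}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(question: str, docs: dict[str, str], k: int = 1) -> list[str]:
    """Rank documents by word overlap with the question; return the top-k ids."""
    q = Counter(tokenize(question))
    scores = {
        doc_id: sum(min(q[word], count) for word, count in Counter(tokenize(text)).items())
        for doc_id, text in docs.items()
    }
    return sorted(scores, key=lambda d: scores[d], reverse=True)[:k]


def build_prompt(question: str, docs: dict[str, str], k: int = 1) -> str:
    """Augment the question with the retrieved snippets before sending it to an LLM."""
    context = "\n".join(docs[d] for d in retrieve(question, docs, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


print(build_prompt("How many days does shipping take?", DOCS))
```

The instruction to answer only from the supplied context is one common way to reduce off-topic or invented answers; it narrows the model's focus but does not guarantee accuracy on its own.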

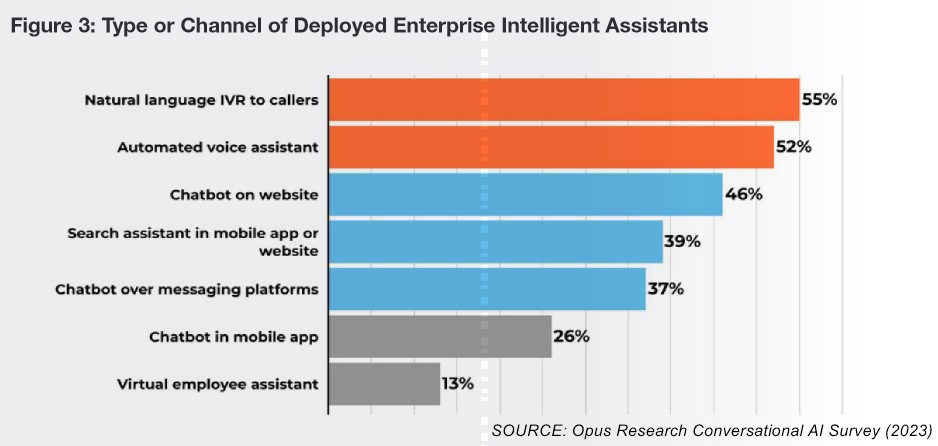

4. Gen AI and conversational AI show up in both chatbot and voice interactions. Evidence shows they are being used in both text and voice dialogues via intelligent virtual agents. The Opus Research graph shows voice slightly ahead of text, but the takeaway is that companies are using these intelligent assistants across multiple channels in multiple forms. Messaging and mobile apps also appear, confirming that multiple channels are already in use.

5. Boosted quality and quantity with better answers to common FAQs. What's striking is the number of ways gen AI and LLMs can be leveraged here. First, they can identify the most common FAQs faster through improved natural language understanding (NLU), data collection and real-time translation. With custom LLMs, they can also serve up targeted questions and answers to specific audiences.

The breadth is surprising and helps you understand the potential ROI. Agents can get answers served up on their screens in near real time. The most surprising example came from a company I interviewed that, as part of its service, delivers FAQs and answers to its clients for posting on the clients' websites. Faster answers to more questions elevate CSAT, boost NPS and strengthen customer loyalty.

4 Potential Risks

1. A lack of 'guardrails' can result in PR disasters. This happens when uncontrolled gen AI automation takes conversations in the wrong direction. In one recent example, a frustrated customer realized he was chatting with an international shipping company's gen AI bot. He asked it for 5 reasons why the company was terrible, and the bot wrote a polished answer. He then asked several funny questions, and the bot answered them all quite well, one in haiku form as instructed, another with swear words as requested. Each answer belittled the company. Screenshots of the dialogue went viral in articles reaching millions of readers, creating a PR nightmare.

2. The risks and rewards of proximity to Microsoft, Google and Amazon. You can argue that proximity to the companies driving generative AI and LLMs increases your chances of success. The CMO of a successful CCaaS (contact-center-as-a-service) provider told me, "Vendors who are not developing their own gen AI are working on borrowed time. Google, Microsoft and Amazon may eventually own this space one way or another." I find that convincing if conversational AI and LLMs deliver at full scale across the many use cases people are now proposing. It reshapes the perpetual 'build vs. buy' discussion.

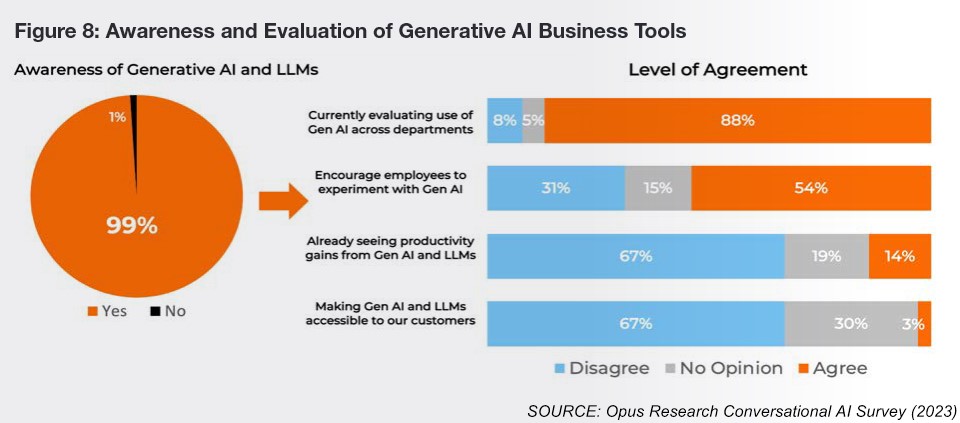

3. Fully understanding ROI before proceeding. No one can specify the exact CCTR outcome, but a range of financial and human advantages seems possible. More companies today are in 'evaluate and plan' mode than in 'deploy and track' mode. Multiple market studies have shown that customers still generally prefer live agents over chatbots, so companies are taking a measured approach. A 2023 survey by Opus Research confirms this picture: awareness of gen AI is high, but most companies are still early in running live end-user deployments.

Dan Miller, Lead Analyst at Opus Research, explains, "Refinements in LLMs and specialized language models (SLMs) are coming to market at unprecedented speed, but executives indicate that this is just the beginning of a years' long process of identifying the best use cases and deploying appropriate AI-based solutions."

4. Will agents actually use these assistance tools? It's really 2 questions. The first is whether the company will invest the time and tools needed to adequately train its agents to use them. The second, and the most vexing to me, is whether agents will actually take the time to read the suggested customer answers, choose one and then use it in their dialogue. Having spent time in contact centers, I know the agent already has much to manage in their UI during a customer session. Now they may need to read 1-3 possible answers to the customer's question and decide which is the best fit. Agent-assist tools are one of the most common applications of gen AI, and having watched live agents in the past, I have to ask: will agents actually take the time needed to use them as designed?

3 Unanswered Questions

1. Will gen AI/conversational AI replace human agents? Not any time soon, and beyond that, we can't say. As with self-driving cars, the idea is simple. However, effective, positive, consistent and reliable execution across today's international contact centers is another matter. It could be 15 years ... or never.

2. Will generative AI realize the full customer service benefits people are pitching? I've already heard amazing, motivating examples, and there's no doubt it will be used. However, I have also heard of hallucinations, incorrect answers and ROI that is hard to pin down given how fast the technology is changing. Companies may choose a 'blended' agent+AI approach rather than turning over total control, reducing their risk. The final benefits are TBD, but the journey as the market advances will not be boring!

3. Just where will we be in 2 years? In a recent webinar, a leading CCaaS executive was asked what conversational AI would look like in customer service 2 years from now. Their answer was dead on: "we're not 100% sure." Not exciting, but so, so true. They went on to explain how it is progressing now. The implication is to develop a full plan and then progress in incremental steps. Adopt new elements, learn lessons, make adjustments, then adopt more. Lather, rinse, repeat.

That's a Wrap

While different companies are adopting gen AI for customer service at different speeds, there's no doubt the trend will continue in 2024. If it works as promised, I believe the biggest benefit will be smoother CX and, with it, stronger customer loyalty and CSAT. The cost savings are nice, but loyalty is what companies are willing to invest in. Increased NPS, both pre- and post-purchase, is the 'holy grail' that companies will want to see proof of first. As conversational AI matures and more companies, and therefore more customers, use it, it will become the standard rather than the exception.

In short, I am a human being with years of experience in customer service technology sharing these insights. At least, I think so. Opus Research is a recognized analyst firm known for its expertise in conversational AI and customer service technologies. Opus graphics were used with permission from their report '2023 Conversational AI Intelliview.'