By Jarrod Davis | Date Published: March 01, 2023 - Last Updated July 12, 2023

Knowledge management (KM) has two key components: the delivery side, which is agent- and customer-facing, and the editorial side for managing content, including archiving, versioning, languages, and formatting. The editorial side is key and, critically, is what differentiates dedicated KM from generalized document management tools like SharePoint.

However, the agent- and customer-facing sides are dead; most people just don’t know it yet. To put it in contact center tech terms, they are siloed solutions: they lock information inside one specific user interface even though it’s needed elsewhere.

But as the cyberpunk in me likes to remind people, information wants to be free. That means it should be managed within your KM system, but not used and delivered there. Life is out on the streets, or for contact centers, out in dozens of channels. Agents don’t want to switch tabs and read a wall of text, and neither do customers. Knowledge management solutions are just that - management. Traditionally, they have been bundled with delivery mechanisms as well, but that was never the best solution.

There are three technologies that collectively put the final nail in the coffin of agent- and customer-facing KM. They all exist right now and can be deployed today. They are:

- Semantic Search (incl. vector search)

- Agent Assistance powered by Conversational AI

- Large Language Models

The last two are used in combination, so I’ll start with semantic search.

Semantic/Vector vs. Keyword Search for Non-Techies

Classic or keyword-based search is the traditional and currently most common approach, in which a query is matched against a document's metadata (title, descriptions, keywords) or against the text within the document. The assumption is that documents containing the same keywords as the search text are likely to be relevant.

That assumption is partially true, but dangerous. What happens with “tip of your tongue syndrome,” when you can describe exactly what you’re looking for but don’t know the right terms or document title? It’s the equivalent of saying, “You know what's-his-name…he lives nearby, has brown hair, has the dalmatian and works for such and such a company?” But for the life of you, you just can’t remember his name.

Classic search fails here because you can’t search well conversationally using related terms. Granted, KM systems have indeed taken steps to address this and some do get by, but ultimately they’re at the mercy of the search algorithm.
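To make the keyword-matching assumption concrete, here is a minimal sketch in Python. The three articles and the function names are invented for illustration and are not taken from any particular KM product; real engines add stemming, ranking formulas, and metadata weighting on top of this basic idea.

```python
import re

# Hypothetical mini knowledge base: (title, body) pairs invented for illustration.
ARTICLES = [
    ("Reset your password", "Steps to reset a forgotten password from the login page."),
    ("Refund policy", "How to request a refund for a cancelled order."),
    ("Change a flight booking", "Rebook or change the date of an existing flight."),
]


def tokenize(text):
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def keyword_search(query, articles=ARTICLES):
    """Rank articles by how many distinct query words appear in title or body."""
    query_terms = tokenize(query)
    results = []
    for title, body in articles:
        overlap = len(query_terms & tokenize(title + " " + body))
        if overlap > 0:
            results.append((overlap, title))
    # Highest overlap first.
    return sorted(results, reverse=True)


# Works when the user happens to know the right words...
print(keyword_search("reset password"))               # [(2, 'Reset your password')]
# ...and returns nothing when the same need is phrased differently.
print(keyword_search("I can't get into my account"))  # []
```

The second query describes exactly the problem the password article solves, yet it shares no words with it, so a purely keyword-based match comes back empty.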

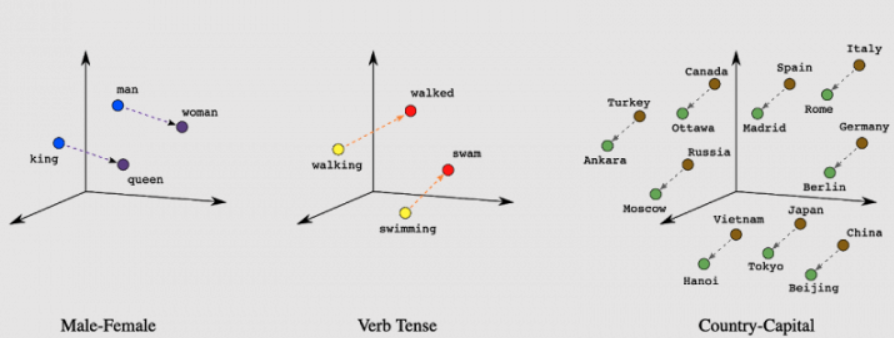

Semantic search includes multiple technologies, including vector embeddings and machine learning. It is a more advanced approach which considers the actual content of the document, including text, images, audio, video and the relationships between them. This technique takes into account factors like the context, meaning, and intent behind the search. It plots the distance (vector) between different data points to better understand relationships. This simple chart from Algolia explains it visually:

Source
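To give a feel for how distance between vectors translates into relevance, here is a minimal Python sketch using cosine similarity, one common closeness measure. The three-dimensional vectors below are made up by hand purely for illustration; in a real deployment they would come from an embedding model and have hundreds of dimensions.

```python
import math

# Hand-made "embeddings", invented for illustration only.
# The dimensions loosely stand for: (account access, payments, travel).
DOC_VECTORS = {
    "Reset your password": [0.9, 0.1, 0.0],
    "Refund policy": [0.1, 0.9, 0.2],
    "Change a flight booking": [0.0, 0.3, 0.9],
}


def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def semantic_search(query_vector, doc_vectors=DOC_VECTORS):
    """Rank documents by similarity to the query vector, most similar first."""
    scored = [
        (cosine_similarity(query_vector, vector), title)
        for title, vector in doc_vectors.items()
    ]
    return sorted(scored, reverse=True)


# Assumed embedding for a query like "I can't get into my account":
# it leans heavily toward the "account access" dimension.
query_vector = [0.8, 0.2, 0.1]
best_score, best_title = semantic_search(query_vector)[0]
print(best_title)  # Reset your password
```

The query shares no keywords with the password article, but because its vector points in nearly the same direction, that article still ranks first.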

In simple terms, classic search is like looking for a needle in a haystack, while semantic search is like having a personal assistant who knows exactly what you're looking for and finds it for you. Classic search relies on specific keywords, while semantic search looks for nuanced connections between ideas and concepts.

Semantic search and closely related embedding techniques are what enable Amazon, Netflix, Google and others to deliver personalized and more relevant recommendations and search results. That’s why Netflix can make better movie recommendations than your KMS can with support content. Until recently, the cost and complexity of this technology put it out of reach for those without significant resources. This is no longer the case.

For full disclosure, a hybrid of both models also exists and is often an excellent solution, but I’m staying high level here and will intentionally skip further detail.
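For readers who do want a glimpse of the hybrid idea, one simple way to combine the two is a weighted blend of a keyword score and a semantic score. The sketch below assumes both scores are already normalized to the range 0 to 1; the function names and the `alpha` weight are illustrative assumptions, not a description of how any specific product does it.

```python
def hybrid_score(keyword_score, semantic_score, alpha=0.5):
    """Blend two relevance scores in [0, 1]; alpha is the weight on the semantic side."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    return alpha * semantic_score + (1.0 - alpha) * keyword_score


def rank(candidates, alpha=0.5):
    """Sort (title, keyword_score, semantic_score) tuples by blended score, best first."""
    return sorted(
        candidates,
        key=lambda c: hybrid_score(c[1], c[2], alpha),
        reverse=True,
    )


# Hypothetical scores: one article matches the exact words, the other matches the meaning.
candidates = [
    ("Exact keyword match", 1.0, 0.0),
    ("Close in meaning", 0.0, 1.0),
]
print(rank(candidates, alpha=0.75)[0][0])  # Close in meaning
print(rank(candidates, alpha=0.25)[0][0])  # Exact keyword match
```

Tuning the weight lets a team favor exact terms (product codes, error numbers) or meaning (conversational questions), depending on what its users tend to type.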

So, why does this make the customer- and agent-facing side of current KM systems irrelevant? Let’s first look at the next two points, which focus on delivery.

Conversational AI & LLMs

Conversational AI (CAI) may seem out of place here, but bear with me. In essence, it creates a natural language interface for interacting with computers. If that sounds odd, it simply means you can talk to software instead of using your mouse, keyboard, and monitor to carry out tasks.

While that may sound like Siri or Alexa, the true power comes from the automation and orchestration capabilities included. Talking to a computer via the phone, chat, or any self-service solution has no value if you can’t actually get things done, like changing your flights, getting a refund, or placing an order. So behind the scenes, CAI sits on top of your tech stack like a meta layer, moving data and actions back and forth between your systems. In the case of changing travel plans, that allows a customer to call and be verified and authenticated from CRM data. On the contact center end, it can then access your systems’ booking data, make changes, take data from both systems and automatically summarize it, fill out a case, and do all the after-call work. In action, it’s pure magic.
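The travel example can be sketched as a single orchestration function. Everything in this Python sketch is hypothetical: the `CRM` and `BOOKINGS` dictionaries stand in for real back-end systems, and a production CAI platform would reach them through their own APIs rather than in-memory data.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical stand-ins for a CRM and a booking system.
CRM = {"+15550100": {"name": "Ana", "customer_id": "C-1"}}
BOOKINGS = {"C-1": {"flight": "XY123", "date": "2023-08-01"}}


@dataclass
class CaseRecord:
    """The after-call record that would otherwise be typed up by hand."""
    customer_id: str
    summary: str


def handle_flight_change(phone: str, new_date: str) -> Optional[CaseRecord]:
    """Verify the caller, change the booking, and summarize the interaction."""
    # 1. Verify and authenticate the caller against CRM data.
    customer = CRM.get(phone)
    if customer is None:
        return None  # Unknown caller: hand over to a human agent.

    # 2. Look up the booking and apply the change.
    booking = BOOKINGS[customer["customer_id"]]
    old_date = booking["date"]
    booking["date"] = new_date

    # 3. Combine data from both systems into a case summary.
    summary = (
        f"{customer['name']} moved flight {booking['flight']} "
        f"from {old_date} to {new_date}."
    )
    return CaseRecord(customer["customer_id"], summary)


record = handle_flight_change("+15550100", "2023-08-05")
print(record.summary)  # Ana moved flight XY123 from 2023-08-01 to 2023-08-05.
```

The point of the sketch is the shape of the flow: one layer talks to several systems on the customer's behalf, so neither the customer nor the agent has to.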

Large Language Models (LLMs) come into play here because they can be leveraged inside the agent assistance tools that CAI offers. The CAI system listens in on voice or chat interactions, transcribes the conversation, and simultaneously ascertains the issue and intent. LLMs augment the language understanding, take a standard response or KM article, and then summarize and reword it based on the customer’s context and situation. Imagine turning every KM article into a hyper-personalized answer without having to write and maintain 50,000 personalized variations!
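A rough sketch of that personalization step in Python might look like the following. The prompt wording and the function names are assumptions made for illustration, and the `llm` argument is a placeholder for whatever model client you actually use; it is passed in as a plain function so the sketch does not depend on any vendor's API.

```python
from typing import Callable


def build_prompt(article: str, customer_context: str) -> str:
    """Combine one KM article with the customer's situation into an LLM prompt."""
    return (
        "Rewrite the knowledge article below as a short, friendly answer "
        "tailored to the customer's situation. Use only facts from the article.\n\n"
        f"Customer situation: {customer_context}\n\n"
        f"Knowledge article:\n{article}\n"
    )


def personalize(article: str, customer_context: str, llm: Callable[[str], str]) -> str:
    """Send the prompt to the supplied LLM function and return its answer."""
    return llm(build_prompt(article, customer_context))


def stub_llm(prompt: str) -> str:
    """Stand-in for a real model call, used only to make this sketch runnable."""
    return f"(model answer based on a {len(prompt)}-character prompt)"


answer = personalize(
    article="Refunds are issued to the original payment method within 5 business days.",
    customer_context="Cancelled a flight yesterday and paid by credit card.",
    llm=stub_llm,
)
print(answer)
```

The single source article stays in the KM system; the variation is generated on demand for each conversation, which is what removes the need to maintain thousands of hand-written versions.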

Knowledge Delivery via Agent Assistance and In-Channel

Wrapping things up, agents are increasingly using advanced AI-powered agent assist tools to surface all relevant case data in real time during all interaction types. When knowledge pops up directly on the agent desktop a split second after the customer has explained the issue, there’s simply no need to switch over to a different system, perform a search, and then manually read through and summarize the data for the customer.

Similarly, when customers interact via self-service in a voice or text-based channel, in most cases they’ll want the information in that same channel. No customer ever asked for a 15-page PDF in small type; they want a direct but conversational answer, the kind ChatGPT can deliver. Conversational AI augmented by an LLM can deliver exactly that in the channel, or even automatically generate an email to the customer with the summary. If you must, it can include the full document as an attachment for reference.

Semantic search (which builds on the same kind of vector embeddings that LLMs rely on), combined with Conversational AI and LLMs, means an end to the standardized walls of text we all despise. This technology trifecta is essentially the tl;dr version of KM.

The technology is already here and the costs are low enough for adoption. The question is whether existing vendors will innovate, or whether we’ll have to wait for a startup to disrupt the KM market.